In most decisions including investing, there are two ways to be wrong:

Doing something that doesn’t work (false positive, type 1 error)

Not doing something that would have worked (false negative, type 2 error)

Investors and quants in particular worry more about the type 1 error - accepting a fake result thinking it is real. However there is another type of error that lurks behind - the type 2 error - rejecting a real result, thinking it is fake.

Contrary to the almost universal belief that type 1 errors in the form of data-mining are the worst thing that can ever happen to quants (latest example), I believe that type 2 errors are much worse because they end up producing undifferentiated and overcrowded models without innovation, and hence no alpha.

Let me explain:

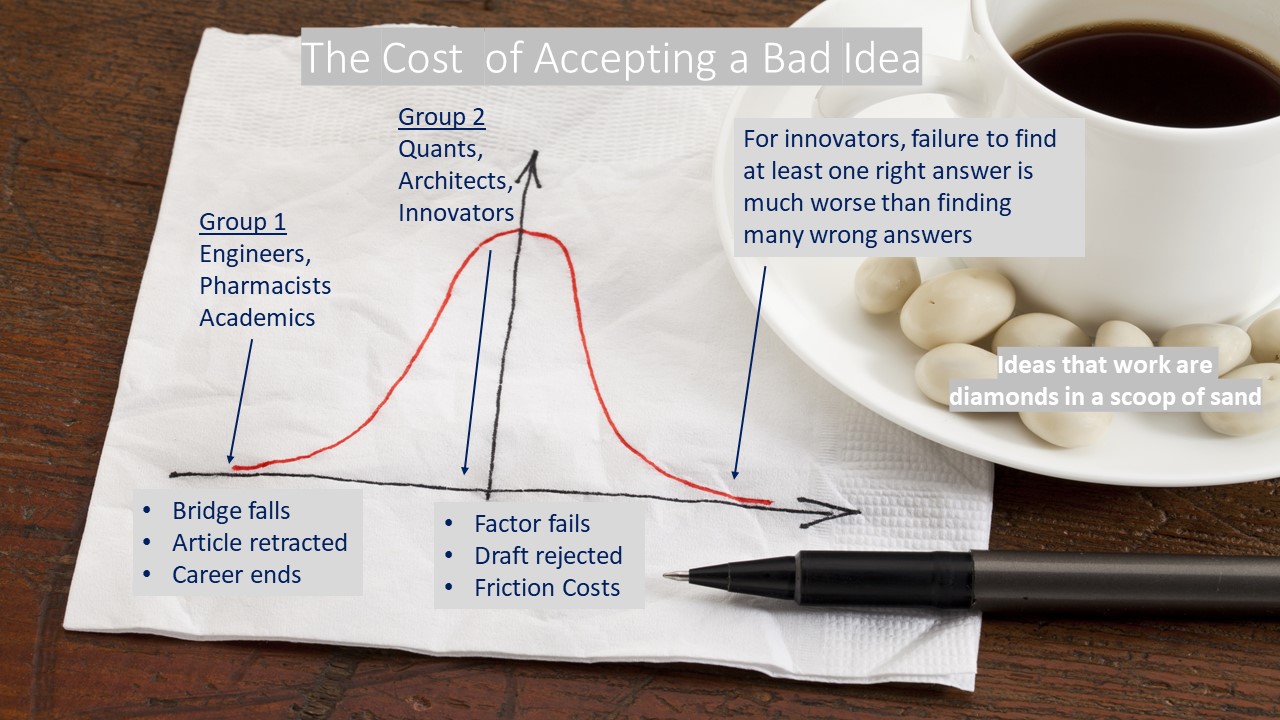

There appear two groups of professional tasks:

The first group is where type 1 error has very large negative costs because it might produce fatal outcomes. For example, if you are building a bridge, following instructions is essential, so the bridge remains intact when the first truck drives over it. The same goes for prescribing drugs. There is not much room for experimenting. To rephrase Nassim Taleb’s “Skin In the Game” concept: it’s hard to take an average across some bridges that worked, and others that fell apart. In group 1 tasks, type 1 errors should be avoided at all costs.

However there is a second group of tasks where type 1 errors don’t have such negative costs but type 2 errors do. For example if you needed to invent a more efficient bridge, or discover a better treatment, then rejecting a potentially great solution is actually a real failure. This group of tasks is creativity-focused and is often carried out by the architects, the artists, the writers, the innovators, the scientists, and the entrepreneurs. For them, the cost of type 1 error is much lower - a few hours to sketch a new design, draft an idea, another lab experiment and sunk start up costs. On the other hand, type 2 errors cost them a lot. Failing to solve new problems, to innovate, invent, and create can be a ‘career fatal’ result. In group 2 tasks, one correct answer is worth many wrong ones along the way.

Which group does the testing of a new investment strategy fall into?

Consider, the cost of type 1 error to a quant who accepts a ‘fake’ factor - ‘p-hacking’ as it is sometimes called? The expected return of a fake factor is randomness minus transaction costs (for example see Cliff Asness’ introduction to Andrew Berkin and Larry Swedroe’s “Your Complete Guide to Factor-Based Investing”). The expected outcome is just slightly negative (trading costs only). On the other hand, what is the cost of rejecting factors that actually would have delivered a statistically positive return? The cost is positive Alpha in the overall model.

Most quants have been trained in group 1 tasks; however, building a model to forecast the future has much more in common with the second group. While making a type 1 error dilutes the value of the good ideas and wastes friction costs - it is not a 'fatal’ price to pay in order to avoid type 2 errors - rejecting positive ideas. Unfortunately, the more one tries to decrease the type 1 error, the higher type 2 error becomes - a common quant practice of using t-statistics does exactly that (for a great recent technical review of this see Marcos Lopez de Prado’s paper).

Idea selection is like picking up a handful of stones, some have hidden diamonds inside, others are just dust. If you over-estimate how accurate your thinking is (because of ‘over-confidence’, and ‘career-safe factor’ biases), you will select less stones than optimal and potentially miss the diamonds. Unlike averaging good and bad bridges, averaging good and bad factors gets the model in the direction of its objective, which is to deliver a positive return.

Pure unsupervised data-mining without thinking is of course dangerous, but traditional (not machine learning) quants have moved so far into the type 1 corner that I believe moving back towards ‘idea mining’ or what group 2 professions call ‘creative thinking’ and ‘experimenting’, will produce much better out-of-sample results.

Great investors recognize that the opportunities they missed are as important as the bad investments they made. For example, this list of Buffett’s biggest mistakes includes several type 2 errors (like not buying Google and Amazon). And speaking of Amazon, I’ll end with a quote from a new book that profiles their approach to risk - an example of group 2 thinking:

“Shrewdly and publicly, Mr. Bezos bifurcates Amazon’s risk-taking into two types:

1) Those you can’t walk back from (“This is the future of the company”), and 2) Those you can (“This isn’t working, we’re out of here”). Bezos’s view is that it’s key to Amazon’s investment strategy to take on many Type 2 experiments—including a flying warehouse or systems that protect drones from bow and arrow. They’ve filed patents for both. Type 2 investments are cheap, because they likely will be killed before they waste too much money, and they pay big dividends in image building as a leading-edge company” - Scott Galloway, The Four: The Hidden DNA of Amazon, Apple, Facebook, and Google

In sum, I believe the reason why traditional quants (and many other investors), do not deliver lasting Alpha is not because of too much data-mining, but because of the lack of a personalized innovation process to source and implement new ideas.